As I sit here on New Year’s Eve watching the ball drop and the TV news run its 2018 retrospectives, I’m struck that we should let you know what’s happening with AudioKit. It’s been a banner year! Where to start?

We started the year on AudioKit 4.0, and while there have been many updates, we’ve managed to keep the API fairly stable, so we’ve only grown to AudioKit 4.5. Believe it or not, that’s actually more a point of pride than zooming through versions like we did early on.

But that’s about the only small news we had in 2018; everything else was huge. I can’t believe that all this happened this year:

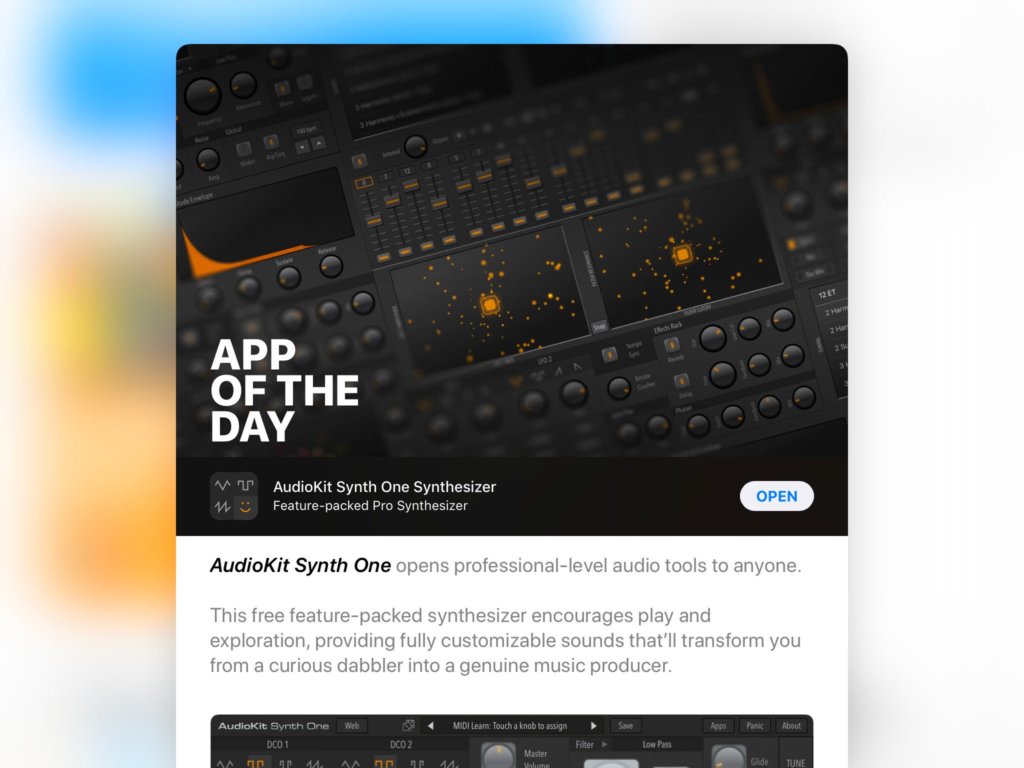

- Our free, open-source synthesizer AudioKit Synth One was released in June, and it became one of the most downloaded iPad synths of all time.

- I got to meet and befriend a personal hero of mine, Roger Linn.

- I gave one of the keynote addresses at ADC 2018 in London in November.

- AudioKit was honored to have both Synth One and our fundraiser app Digital D1 appear on several “Best Apps of 2018” lists, including Music Radar, Cult of Mac, Ask.Audio, and Stuff.tv.

- We’ve helped dozens of creative apps and aspiring developers reach the App Store this year, both by helping people with code and by promoting their apps on social media and this blog.

- Our Audio Developer Interviews began in February, highlighting the work of a wide range of iOS audio developers.

- The AudioKit Core Team has grown to 20 people and we have over 150 people on our Slack channel.

And you want to know the crazy part? I think we’re just getting started. I believe 2019 will be an even better year for AudioKit than 2018.

AudioKit Synth One featured in all Apple Retail Stores in America in 2018.


First of all, users of AudioKit’s current apps (Synth One and Digital D1) will be getting significant upgrades soon. Plus, we have some killer new apps coming out. I’ll leave the marketing hype up to Matt, but I will say I’m super excited for our upcoming apps.

Thanks to everyone and here’s to a productive 2019!

Very stimulating!

Your priorities for what should be coming this year align with mine, as a user. And they could go a whole long way to make me into more of a coder.

In fact, while Android support would probably generate the most traction for commercial apps, my dream would be to have some of the same tools on Raspberry Pi devices (especially with the Pisound HAT), Bela, iOS, macOS, Windows, and Ubuntu MATE. In a way, Puredata is probably the only way to accomplish this, right now. And it may take quite a while before SOUL allows for processing to be distributed across devices in such a way. But the mere thought of this is quite compelling. Makes me daydream.

And part of this has to do with those priorities, which apply to D1 and Synth One. While it’s possible to run the same Pd code on diverse platforms, Pd doesn’t support MPE or AUv3. It does allow for diverse tuning systems to be used, but not in a consistent way. It has some decent resources for learning and some welcoming groups but the examples end up being insufficient. And there’s nothing like a “playground”.

As an ethnomusicologist, I tend to be interested in tuning support at an intellectual level more than a concrete one. It remains in my mind as something to explore and some experiences with Samvada or Synth One or Gestrument have been quite fun. But this is actually a space where inspiring examples would really help me. Adaptive tuning in Crudebytes apps is of the same type. It’s really cool in theory and can sound very good. But it remains more of a desire born of the “idea of it”.

Continuous pitch in MPE is quite different. It feels good in practice and it becomes something which splits tools between those which enable me and those which don’t. Playing a non-MPE synth with my ROLI Lightpad M does feel like something is missing.

The “y-axis” of MPE is also very important, to me. Possibly more. Thing is, even synths which support MPE may not have that many patches which use it. The way SynthMaster softsynths deal with CC74 (assigning it to CC1) is pretty neat and does end up producing good results. But, apart from ROLI’s Noise app, we don’t really have the iOS equivalent to ROLI/Fxpansion’s MPE-enhanced desktop softsynths (Equator, Cypher2, Strobe2).
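To make the CC74-to-CC1 idea concrete, here is a minimal Python sketch of that kind of remapping on raw MIDI bytes. It is only an illustration of the general technique, not how SynthMaster actually implements it; the `remap_cc` function and its parameters are hypothetical names made up for this example.

```python
def remap_cc(message: bytes, source: int = 74, target: int = 1) -> bytes:
    """Rewrite one controller number in a 3-byte MIDI Control Change message.

    Hypothetical helper for illustration only. Messages that are not
    Control Change, or that use a different controller, pass through as-is.
    """
    is_control_change = len(message) == 3 and (message[0] & 0xF0) == 0xB0
    if is_control_change and message[1] == source:
        # Keep the status byte (which carries the channel) and the value.
        return bytes([message[0], target, message[2]])
    return message
```

With something like this in front of a non-MPE patch, the “y-axis” of an MPE controller would drive whatever the patch already maps to the mod wheel.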

Thankfully, Noise does work as AUv3 for patches based on the Equator engine (or for their drum engine). That’s been very useful. Makes me dream of getting the equivalent with Synth One and D1, which would have the added benefit of allowing for tweaking the sounds. If there were a common space to share patches and enough MPE-enabled users, it could allow us to have an increasing number of MPE-enhanced patches, with all dimensions of control having noticeable and appropriate effects on sound.

Which reminds me of a longstanding “FeatuRequest”: a way to manage/organize/classify/exchange patches across softsynths. In some ways, Audiobus could do something like this. And Native Kontrol Standard is an interesting model for this on the desktop. But it’d make even more sense if it were supported in an Open Source library like AudioKit. In fact, it could even connect to MIDI-CI and whichever part of MIDI 2.0 deals with communication between devices and apps. Part of the trigger for me was about all the “Virtual Analog” subtractive synths which could share some patch designs. As crazy as it may sound, there’s something to be said about having a common file format for patch/preset files. A kind of JSON/XML for sound design. Haven’t checked how things work on the VST side and there might already be something there. But it’s really fun to imagine that we could have a kind of machine-readable open format for patches and presets, allowing for some level of automatic classification (mono/poly, MPE-enhanced or not, type of filter control…).
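Just to show what I’m daydreaming about, here is a rough Python sketch of what such a machine-readable patch format might look like. Everything in it is hypothetical: the `Patch` fields, the `to_json`/`from_json` helpers, and the `classify` function are invented for illustration and are not part of AudioKit, NKS, or any existing standard.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Patch:
    """Hypothetical minimal patch description (illustrative fields only)."""
    name: str
    polyphony: str    # "mono" or "poly"
    mpe: bool         # True if the patch responds to MPE dimensions
    filter_type: str  # e.g. "lowpass"


def to_json(patch: Patch) -> str:
    """Serialize a patch to a JSON string with stable key order."""
    return json.dumps(asdict(patch), sort_keys=True)


def from_json(text: str) -> Patch:
    """Rebuild a patch from the JSON produced by to_json."""
    return Patch(**json.loads(text))


def classify(patches):
    """Group patch names by (polyphony, mpe) so a browser could filter them."""
    groups = {}
    for patch in patches:
        groups.setdefault((patch.polyphony, patch.mpe), []).append(patch.name)
    return groups
```

A real format would obviously need far more than four fields, but even this much would let a patch browser answer “show me the mono, MPE-enhanced patches” across different synths.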

But, obviously, this is just me thinking out loud.

As you said, 2018 has been a very good year for AudioKit, thanks to everyone’s work. And 2019 does sound promising if we can get MPE, AUv3, microtuning, and learning resources.

Keep up the good work and thanks for sharing your intentions. Almost as much as opening the code, it helps in building a sense of belonging.