Hey Everyone,

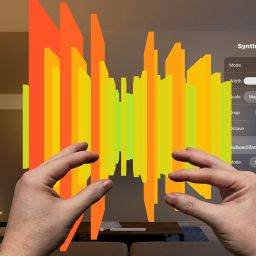

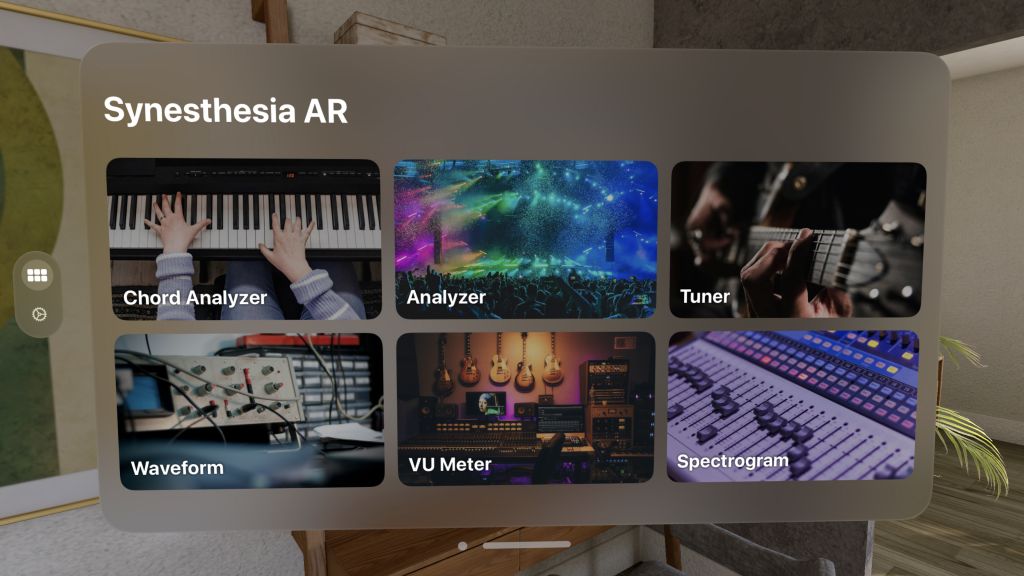

We wanted to share what we’ve been working on for a while now. Aurelius Prochazka, Maximilian Maksutovic, and Taylor Holliday present Synesthesia AR: A toolkit for visualizing sound!

Aure: “The moment the Vision Pro was announced, I knew it was going to change my life forever.”

Max & Taylor were a little more skeptical, but after Aure had the privilege of trying an early demo at Apple Headquarters in Cupertino, he made a compelling case for how groundbreaking the tech was.

Aure: “The experience of being able to look at something in my environment, focus on it to select it, was much more natural than tapping or moving your mouse to select it.”

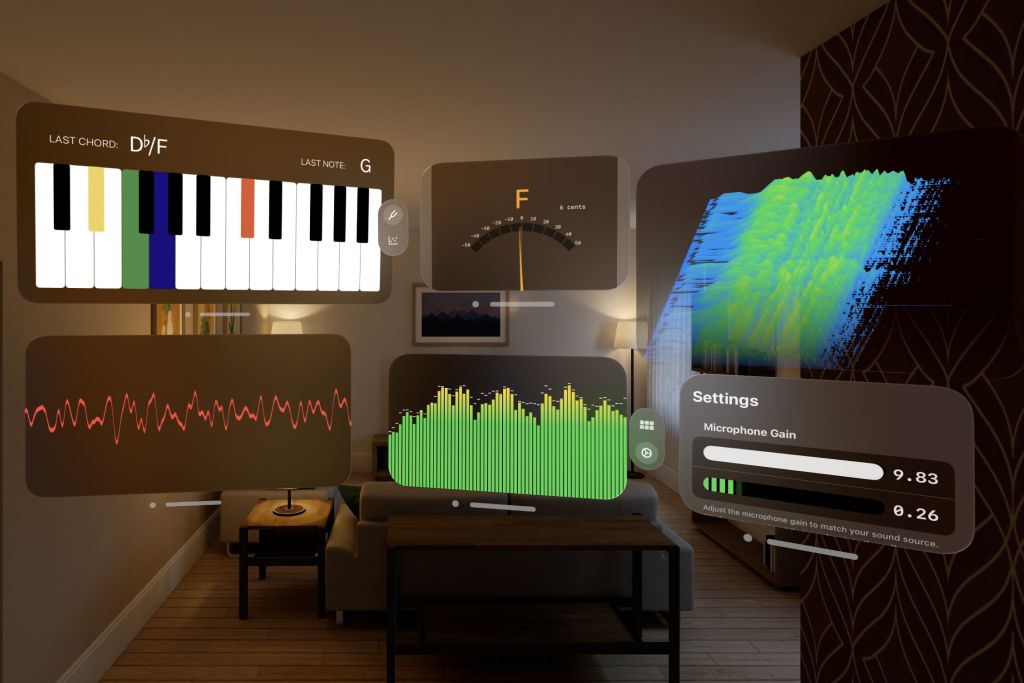

Our intentions for the project were straightforward: we wanted to leverage existing AudioKit technology, we wanted a platform for learning the new visionOS SDK, and we wanted to make as much use as possible of what is unique about spatial computing.

We ended up converting components in AudioKit to work natively in visionOS. We’ve also been busy doing AI experiments in audio (with big thanks to NeuralNote and Spotify’s Basic Pitch), which we really wanted to showcase in Synesthesia AR.

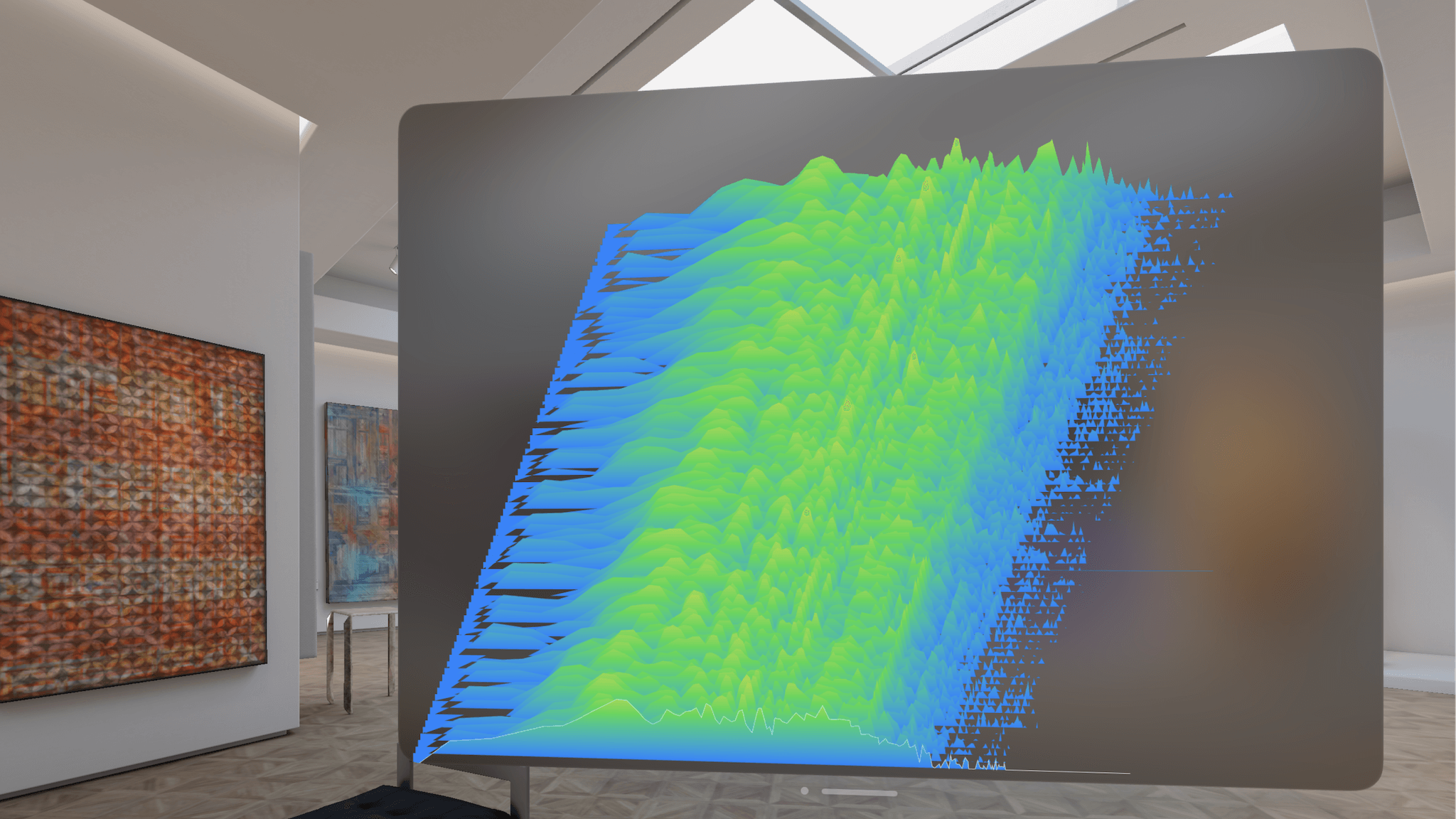

Maximilian: “We hope that this app can serve as a repository for a lot of the different ideas & prototypes we are working on, with a focus on the spatial aspect of visionOS. I personally have a lot to learn about ARKit, and hope we can start to augment AudioKit components to work in 3D. Imagine flying through a spectrogram, or using the advanced hand tracking to scrub through a waveform Minority Report style. This is what I want us to bring to future updates.”

We’d love to hear your feedback and look forward to adding more features in the coming months.

Synesthesia AR webpage

NOTE: Requires Vision Pro hardware